Many of us rely on the internet for information. Whether we want to know how to keep succulents alive, the official Scrabble rules, or what some mysterious rash is, the web is our go-to source.

However, are we willing to put the same level of trust in artificial intelligence (AI) to provide health-related information? If we do entrust our medical and health needs to AI, can we be sure that the diagnoses and treatment protocols it dispenses are accurate?

To determine if people trust AI for medical and health advice, we surveyed 1,015 participants of various generational and racial backgrounds.

Our findings reveal what percentage of respondents prefer virtual assistance to in-person appointments, what concerns they have, and whether particular symptoms warrant contacting a medical professional.

Additionally, we used GPT-3, a large language model developed by OpenAI that generates humanlike text, to produce different types of medical and health advice, then asked medical professionals to rate the output. Read on to find out whether AI-generated medical and health services have a strong future.

Paging the AI-Doctor

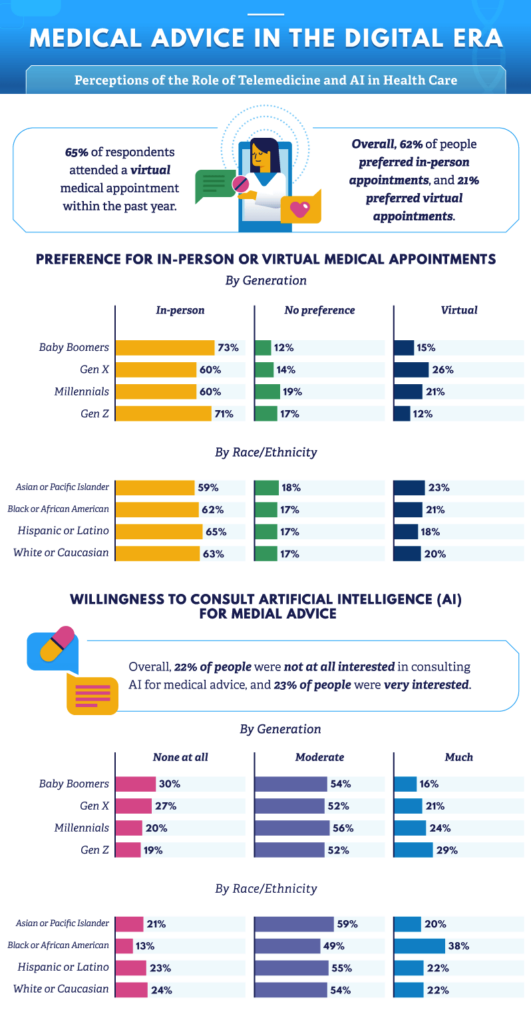

Sixty-five percent of our survey respondents attended a virtual medical appointment within the past year.

This is likely because virtual health services let patients seek medical and health advice without seeing a professional in person, and in-person visits became much more difficult in 2020 due to the COVID-19 pandemic.

However, 62% of people preferred in-person appointments, while 21% favored virtual. Gen Xers (26%) and millennials (21%) showed a higher preference for virtual appointments than other generations.

When asked how interested they were in consulting AI for medical and health advice, Gen Z respondents (29%) showed the most interest.

Still, most participants reported moderate interest, which bodes well for virtual care: experts predict that telemedicine may have a future beyond the pandemic.

Worries When Seeking Medical Help

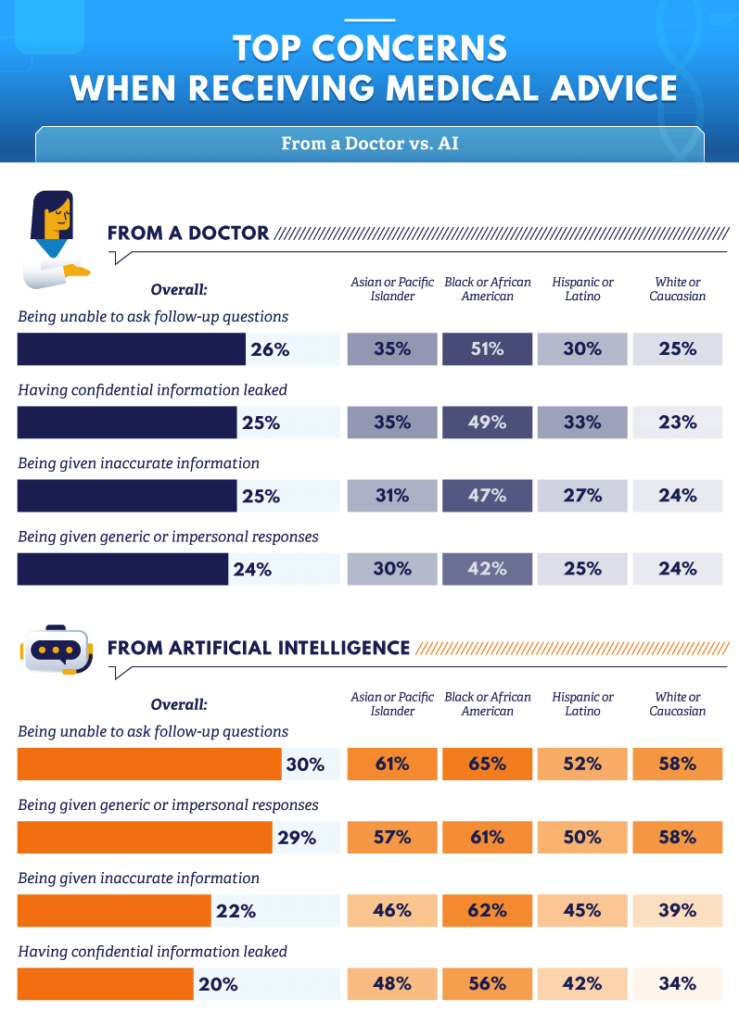

Although medical professionals are trained to assist patients, their authoritative position often elicits pre-appointment anxiety.

Some even worry that doctors will mistreat or ignore their needs because of racial bias.

Whether it is identity- or position-based, such stress creates an environment where patients may not feel comfortable asking questions or accepting medical professionals’ advice.

According to our survey, participants were most concerned that they would be unable to ask follow-up questions during in-person visits with a doctor.

Fifty-one percent of Black or African American respondents shared this concern, compared to Asian or Pacific Islander (35%), Hispanic or Latino (30%), and white or Caucasian (25%) respondents.

Perhaps respondents felt this way because most doctors spend just 17–24 minutes with each patient, giving people very little one-on-one time to understand their diagnosis and protocols — let alone build a relationship.

Unfortunately, AI does not seem to solve this problem: not being able to ask follow-up questions was also respondents’ primary concern about receiving medical advice from AI. Sixty-five percent of Black or African American respondents expressed this concern, followed by 61% of Asian or Pacific Islander respondents.

Other top concerns for both in-person and AI visits were receiving inaccurate information, receiving impersonal information (more of a worry with AI), and a lack of confidentiality.

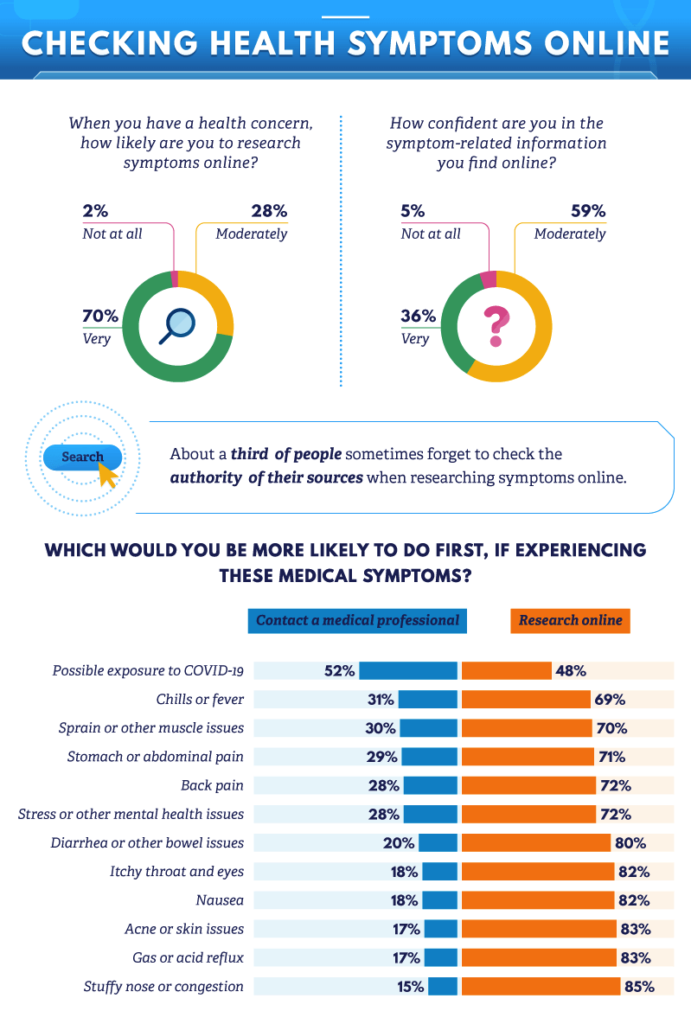

Using the Internet for Self-Diagnosis

Some recent commentary encourages people to self-diagnose using the internet, arguing that the more often it is done, the better and more accurate self-diagnosis becomes. However, our findings show that about one-third of people do not always check the credibility of their sources, which could be problematic.

Overall, 70% of survey participants reported that they research their symptoms online when they have a health concern. Only 36% said they were “very” confident in the medical information they find online; the majority were moderately confident.

Still, widespread internet self-diagnosis may not put human doctors out of business quite yet. If exposed to COVID-19, most participants said they would contact a medical professional before seeking help from Dr. Web. For all other medical woes, however, the overwhelming majority would search online first.

Dr. GPT-3’s Reliability

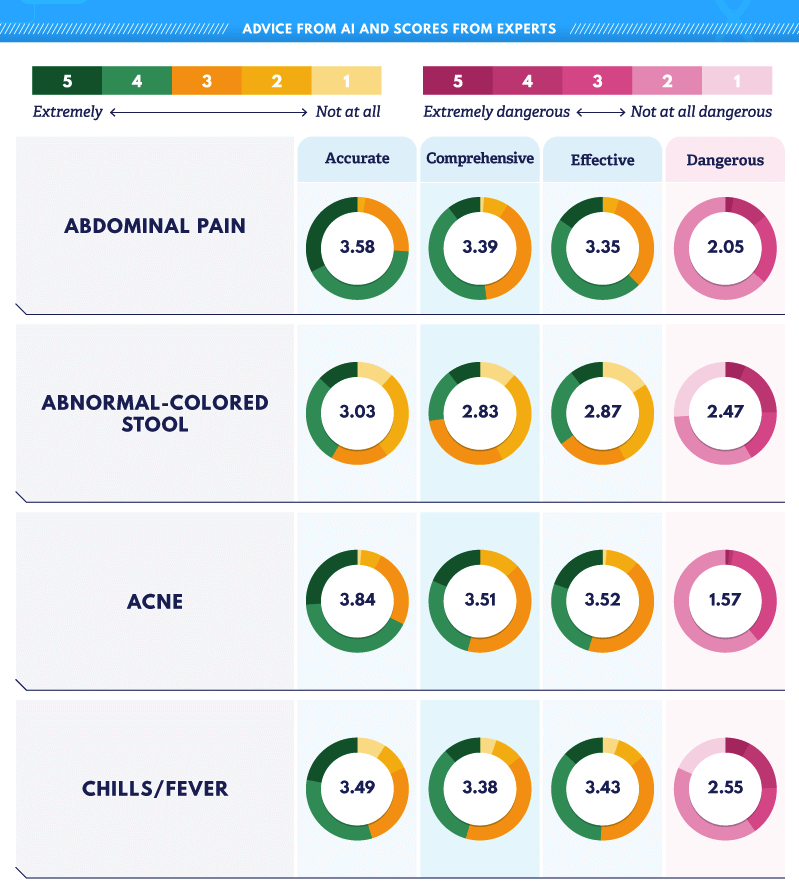

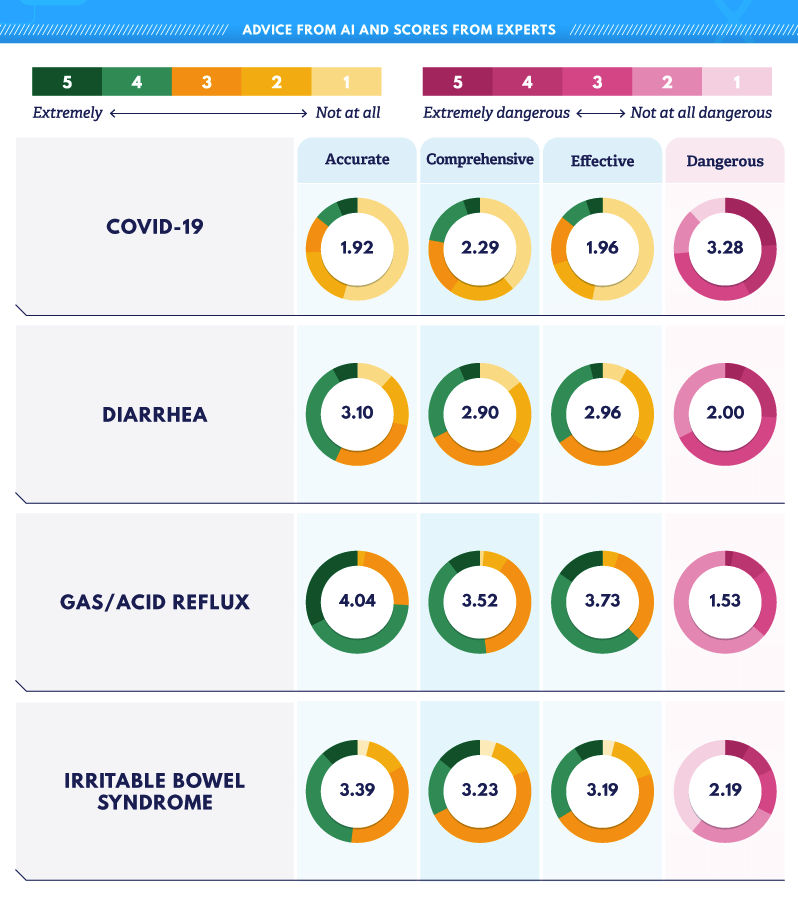

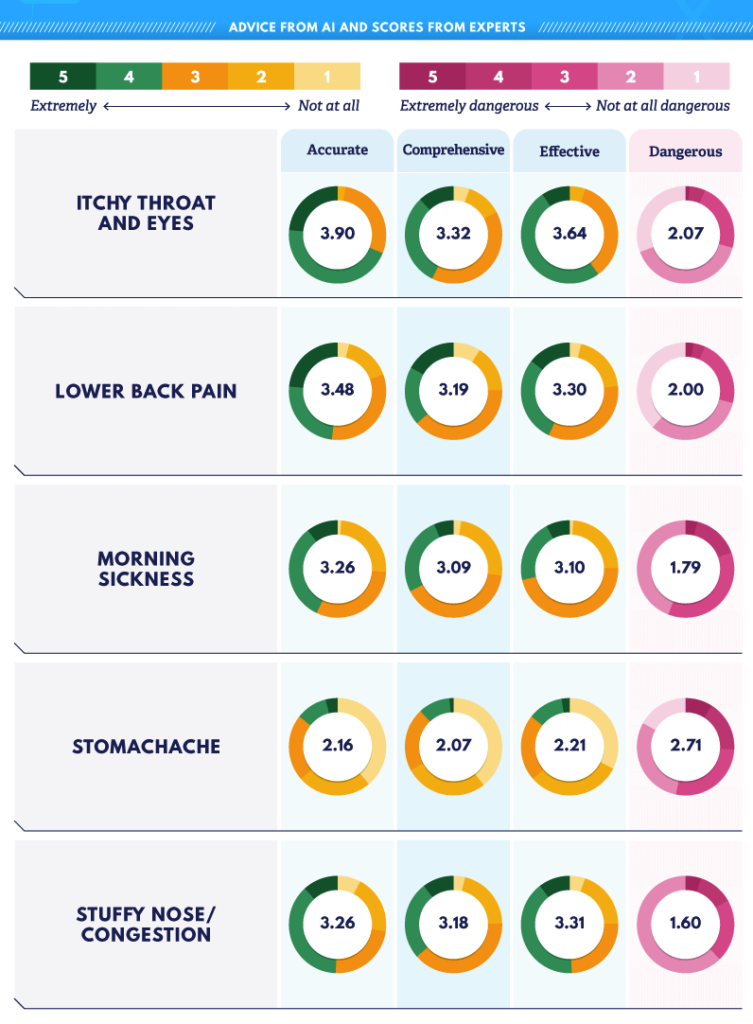

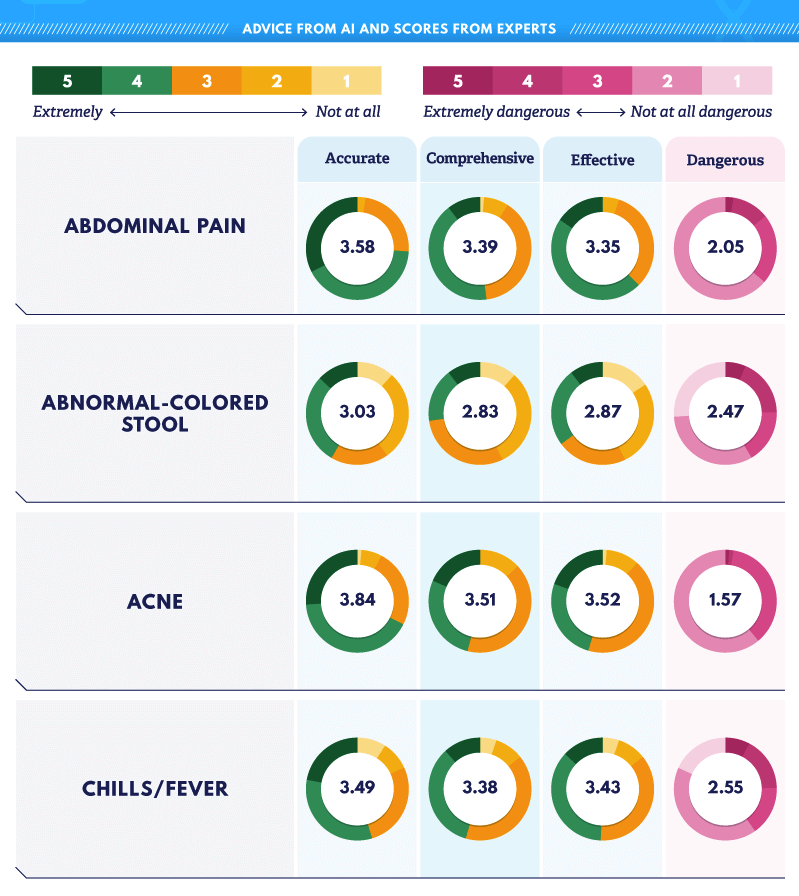

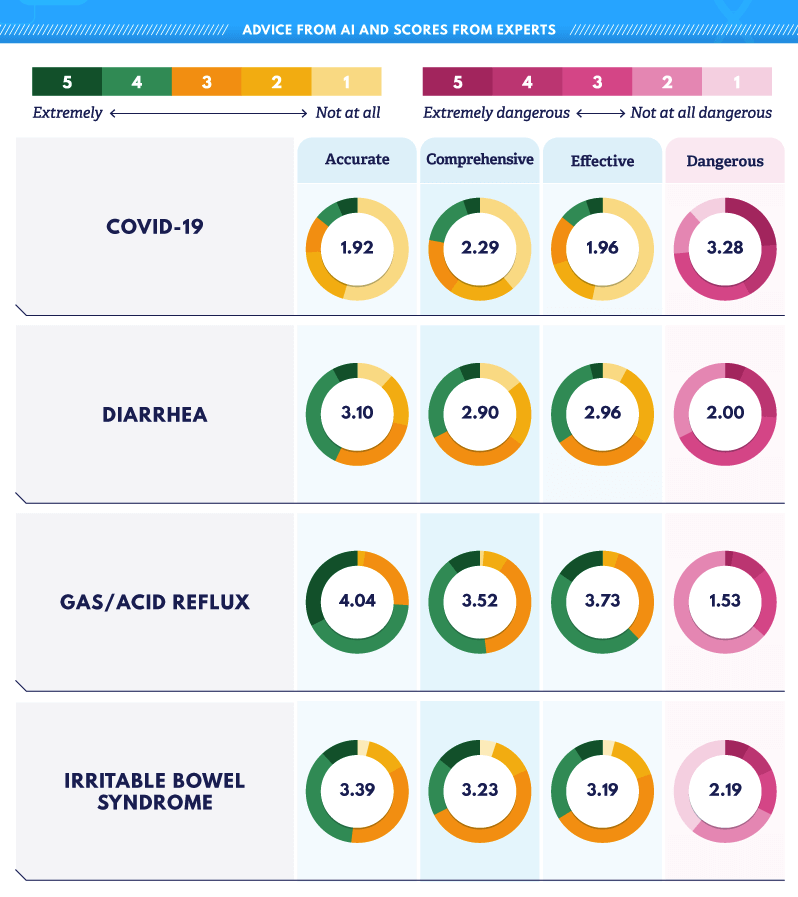

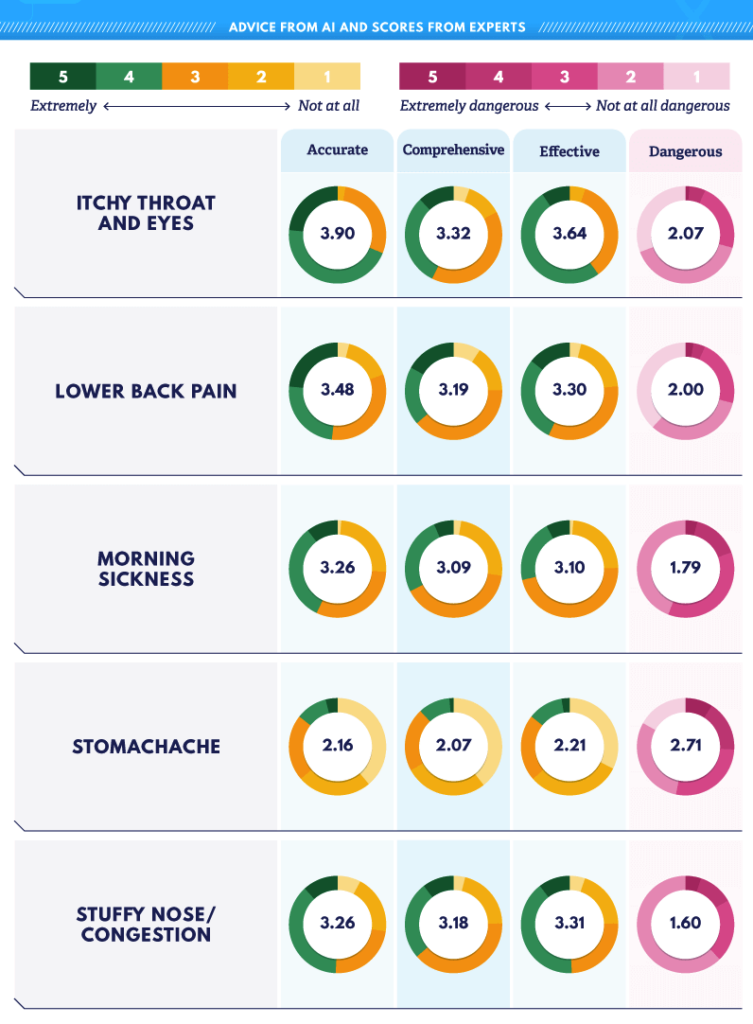

As part of our research, we prompted GPT-3 with 13 symptoms, and it generated medical and health advice for each. We then asked medical professionals, including physicians, nurses, and general practitioners, to rate GPT-3’s advice on four dimensions (accuracy, comprehensiveness, effectiveness, and danger), each on a 5-point scale where 5 meant the most accurate, comprehensive, effective, or dangerous.

When asked for advice about chills and/or fever, the AI said:

“If the temperature goes above 104°F (40°C), you need to be seen immediately. This is a medical emergency because there is danger of brain damage if the temperature stays high for more than twenty-four hours.”

When scoring this advice, health professionals found it incorrect and misleading. But the most dangerous and least accurate advice concerned COVID-19, which received the highest harmfulness rating on the 1-to-5 scale. When asked for the best treatment for COVID-19, the AI responded:

“See a doctor immediately. Make sure you tell your doctor about any medication you are taking, and especially if you take any other erectile dysfunction drugs such as Viagra, Cialis, Levitra or Stendra. These drugs work in a similar way to Covid-19 symptoms but may cause serious side effects when taken with Covid-19 symptoms.”

The first half of the advice contradicted guidance from medical professionals, and the second half was completely incorrect.

According to health professionals, the AI’s most accurate advice was for gas or acid reflux:

“Keep the head of your bed elevated at least six inches (15 cm) by using a wedge or other elevating device. Do not eat spicy foods or large meals late in the evening, as these will aggravate symptoms.”

One of the AI’s strangest responses, which received poor reviews from medical professionals, was for stomachaches. To treat a stomachache, the AI recommended:

“Take an antacid, do not eat for a few hours, drink lots of water and go to bed. In a world where everyone is so quick to give medical advice, it’s worth noting that sometimes the best medicine is to not say anything at all.”

This oddly phrased response is unhelpful and probably should not be given to anyone experiencing pain or other symptoms of illness.

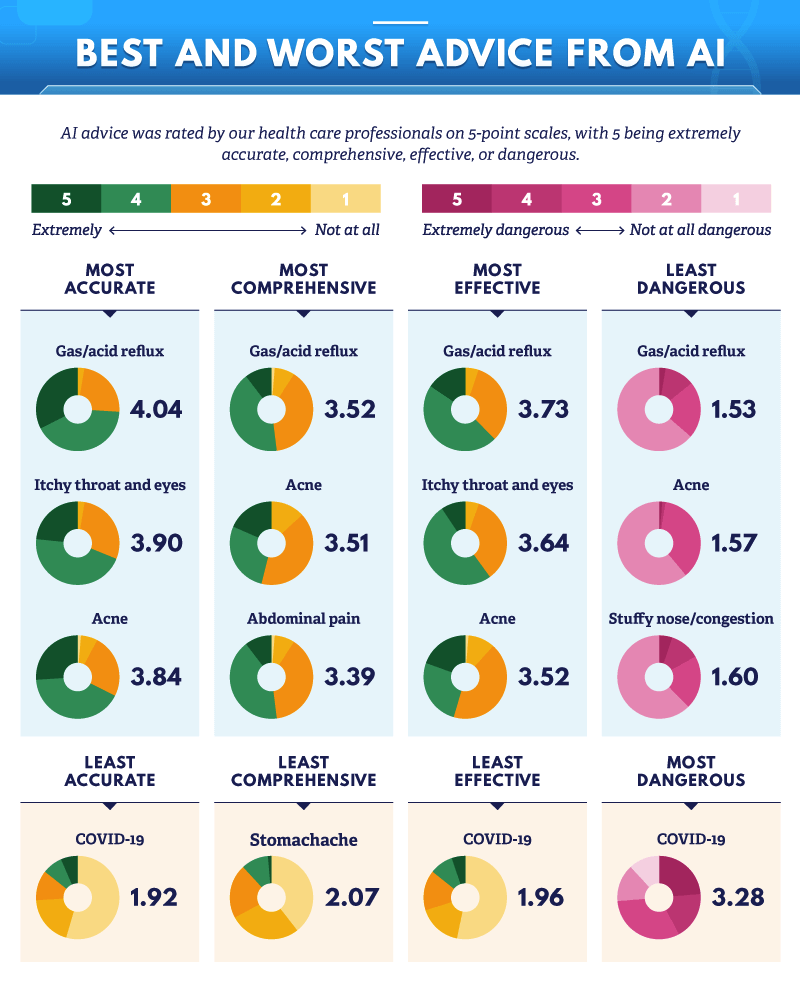

Dr. GPT-3 was most accurate (4.04), effective (3.73), and comprehensive (3.52) when doling out advice about gas and acid reflux, and this advice was also rated least dangerous. Next came its advice for itchy eyes and throat, which rated second in accuracy (3.90) and effectiveness (3.64), and its advice for acne, which rated second in comprehensiveness (3.51).

Overall, according to medical professionals, Dr. GPT-3 was least reliable when giving advice for COVID-19 symptoms. Advice for this condition rated highest for danger (3.28) and lowest for accuracy (1.92). Given these results, it is reassuring that participants in our survey said they would consult a medical professional before the internet after possible COVID-19 exposure.

Real Pros React to GPT-3 Medical Advice

After GPT-3 generated medical and health advice for each symptom we fed it, we ran its output by actual medical professionals to assess accuracy, comprehensiveness, and effectiveness. While GPT-3 performed well in some areas, AI technology may need a few more years of medical school before it can practice medicine.

Below is what some of the medical professionals in our study had to say about GPT-3’s advice. As you can see, they called its advice for COVID-19 symptoms “baseless” and “dangerous,” but they saw some merit in its advice for symptoms such as abdominal pain and itchy throat and eyes.

Is There a Future for AI Medical Assisting?

There is a future in using AI technology to diagnose and care for patients.

In some cases, telemedicine removes barriers for medical professionals and those they care for. However, it seems that GPT-3 remains in the early stages of doling out accurate, effective, and comprehensive medical advice.

Still, most people are willing to put a moderate level of trust in AI technology when it comes to receiving medical advice, which suggests there is a growing market for AI medical assistance.

Methodology and Limitations

For our consumer survey, we collected responses from 1,015 Americans via Amazon Mechanical Turk.

Forty-nine percent of our participants identified as men, about 51% identified as women, and less than 1% identified as nonbinary or gender nonconforming.

Participants ranged in age from 19 to 80 with a mean of 40 and a standard deviation of 12.1. Those who failed an attention-check question were disqualified.

The sample size was 103 for Asian and Pacific Islander participants, 99 for Black and African American participants, and 60 for Hispanic and Latino participants. Larger samples of these groups might have given us more accurate insight into these populations.

The data we are presenting rely on self-report.

There are many issues with self-reported data. These issues include, but are not limited to, the following: selective memory, telescoping, attribution, and exaggeration.

No statistical testing or weighting was performed, so the claims listed above are based on means alone. As such, this content is purely exploratory, and future research should approach this topic in a more rigorous way.
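Because the professional ratings were summarized with unweighted means alone, each score reported above (for example, 4.04 for the accuracy of the reflux advice) is simply the average of the individual raters’ 1-to-5 scores. The short Python sketch below illustrates that calculation. The ratings in it are invented for illustration and are not the study’s data, and it assumes each rater scored each condition once per dimension.

```python
from statistics import mean

# Hypothetical ratings for illustration only (NOT the study's data).
# Key: (condition, dimension); value: individual raters' scores on the 1-5 scale.
ratings = {
    ("gas or acid reflux", "accuracy"): [4, 5, 4, 3, 4],
    ("COVID-19", "accuracy"): [2, 1, 2, 3, 2],
    ("COVID-19", "danger"): [3, 4, 3, 3, 4],
}


def mean_scores(ratings):
    """Return the unweighted mean, rounded to two decimals, for each (condition, dimension)."""
    summary = {}
    for key, scores in ratings.items():
        # Reject anything outside the 5-point scale used in the survey.
        if not scores or not all(1 <= s <= 5 for s in scores):
            raise ValueError(f"scores for {key} must be non-empty and on the 1-5 scale")
        summary[key] = round(mean(scores), 2)
    return summary


for (condition, dimension), score in mean_scores(ratings).items():
    print(f"{condition:<20} {dimension:<10} {score:.2f}")
```

Because no weighting or significance testing is applied, a mean like this says nothing about how much raters disagreed, which is one reason the results should be read as exploratory.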

For our survey of 77 health care professionals, 32% reported having completed some college or holding an associate degree, 31% held a bachelor’s degree, and 37% held a master’s or doctorate degree.

Occupations included a range of job titles from general practitioners and clinicians to home health aides and registered nurses.

GPT-3 was prompted to produce output for each symptom using identical prompts.

This output was lightly edited for length and repetition, but not for content, fact-checking, or grammar. The findings in this article are limited by small sample batches and are for exploratory purposes only, and future research on the capabilities of AI should approach this topic in a more rigorous way.

Fair Use Statement

Would you like to debate the topic of using AI for medical purposes with friends and family? We encourage you to share the results of this study for any noncommercial use. Please be sure to link back to this page so that readers have full access to our methodology and findings and so contributors are credited for their work.

Appendix and additional graphs.


Owner, entrepreneur, and health enthusiast.

Chris is one of the Co-Founders of USARx.com. An entrepreneur at heart, Chris has been building and writing in consumer health for over 10 years. In addition to USARx.com, Chris and his Acme Health LLC Brand Team own and operate Pharmacists.org and the USA Rx Pharmacy Discount Card.

Chris has a CFA (Chartered Financial Analyst) designation and is a proud member of the American Medical Writers Association (AMWA), the International Society for Medical Publication Professionals (ISMPP), the National Association of Science Writers (NASW), the Council of Science Editors, the Authors Guild, and the Editorial Freelancers Association (EFA).

Our growing team of health care experts works every day to create accurate and informative health content, in addition to keeping you up to date on the latest news and research.

How we built this article:

- Content Process

- Article History

Every piece of content we produce is meticulously crafted and edited based on the four core pillars of our editorial philosophy: (1) building and sustaining trust; (2) upholding the highest journalistic standards; (3) prioritizing accuracy, empathy, and inclusivity; and (4) continuously monitoring and updating our content. These principles ensure that you consistently receive timely, evidence-based information.

Current Version

2024-02-05

Edited By

Chris Riley

2023-10-03

Reviewed By

Medical Team

2023-06-23

Written By

Chris Riley

Reviewed By

Camille Freking, M.S.
